Navigating The Landscape Of RSW: A Comprehensive Guide

Introduction

This guide explains what RSW (Random Sampling with Weights) is, how it works, where it helps with imbalanced data, and where it falls short.

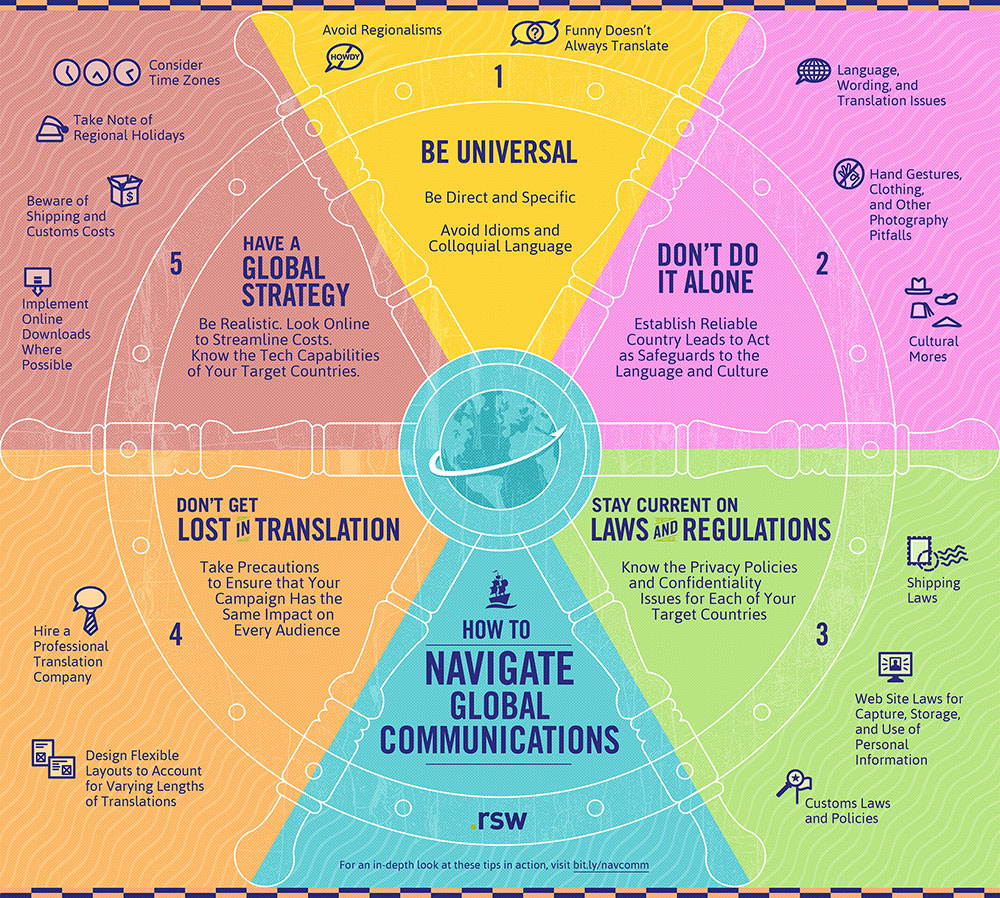

The term "RSW" can mean different things depending on the context. This article covers its meaning in data analysis and machine learning, where RSW stands for "Random Sampling with Weights": drawing a sample from a dataset in which each instance's chance of selection is proportional to a weight assigned to it. The technique is used to build representative training samples from large datasets, particularly when the class distribution is imbalanced.

Understanding the Importance of RSW

Data imbalance, where some classes are far more common than others, is a major challenge in machine learning. A model trained on such data tends to favor the majority class and predict poorly for the minority class, which is often the class of interest (for example, fraudulent transactions among mostly legitimate ones). RSW mitigates this by deliberately changing the class mix of the training sample so that the minority class is adequately represented, rather than mirroring the skewed distribution of the original data.

How RSW Works: A Step-by-Step Explanation

- Identifying the Imbalance: Count the instances in each class and compute the ratio between them to measure how skewed the dataset is.

- Assigning Weights: Assign a weight to each instance based on that imbalance. Minority-class instances receive higher weights and majority-class instances receive lower weights.

- Random Sampling with Weights: Draw instances at random from the weighted dataset, so that each instance's probability of selection is proportional to its weight. Sampling is typically done with replacement, so a minority instance can be drawn more than once.

- Creating a Balanced Subset: The result is a training sample in which the minority class is adequately represented. With inverse class frequency weights, every class is equally likely to be drawn, so the sample is balanced in expectation, although the exact class counts vary from one draw to the next.
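The four steps can be sketched in a few lines of Python. This is a minimal illustration, assuming inverse class frequency weights (one of the schemes listed in the FAQ below) and a toy dataset of 95 majority and 5 minority instances; the function names `inverse_frequency_weights` and `weighted_sample` are hypothetical. It relies on the standard library's `random.choices`, which draws with replacement in proportion to the given weights.

```python
import random
from collections import Counter


def inverse_frequency_weights(labels):
    """Steps 1-2: measure class sizes, then weight each instance by 1 / (size of its class)."""
    counts = Counter(labels)
    return [1.0 / counts[y] for y in labels]


def weighted_sample(rows, labels, k, seed=None):
    """Steps 3-4: draw k instances with replacement, with probability proportional to weight."""
    rng = random.Random(seed)
    weights = inverse_frequency_weights(labels)
    idx = rng.choices(range(len(rows)), weights=weights, k=k)
    return [rows[i] for i in idx], [labels[i] for i in idx]


# Hypothetical toy data: 95 majority (label 0) and 5 minority (label 1) instances.
rows = list(range(100))
labels = [0] * 95 + [1] * 5
print(Counter(labels))  # Counter({0: 95, 1: 5})

sample_rows, sample_labels = weighted_sample(rows, labels, k=1000, seed=0)
print(Counter(sample_labels))  # roughly 500 of each class
```

Because each class's weights sum to 1, every draw picks either class with probability 0.5, so the sample is balanced in expectation even though the minority class has only five distinct instances to draw from.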

Benefits of RSW

- Reduced Bias: RSW counteracts the bias toward the majority class that imbalanced data introduces.

- Improved Minority-Class Performance: Because the model sees more minority-class examples during training, RSW typically improves metrics that reflect minority-class performance, such as recall, F1 score, and balanced accuracy. Overall accuracy and precision can drop, because the model now predicts the minority class more often.

- Enhanced Generalizability: A model that has seen enough minority-class examples is more likely to recognize minority cases in unseen data, which makes it more useful in real-world scenarios where those cases matter.

- Cost-Effectiveness: RSW is computationally cheap. Computing the weights takes a single pass over the labels, and it avoids the nearest-neighbor searches that synthetic methods such as SMOTE require.
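Plain accuracy can look strong on imbalanced data even when a model ignores the minority class entirely, which is why minority-sensitive metrics are a better yardstick for judging whether rebalancing helped. The sketch below assumes hypothetical toy labels (95 majority, 5 minority) and a predictor that always outputs the majority class; the metric functions are hand-written for illustration rather than taken from any particular library.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, cls):
    """Fraction of true instances of `cls` that were predicted as `cls`."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
    total = sum(1 for t in y_true if t == cls)
    return hits / total


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so every class counts equally."""
    classes = sorted(set(y_true))
    return sum(recall(y_true, y_pred, c) for c in classes) / len(classes)


y_true = [0] * 95 + [1] * 5
always_majority = [0] * 100  # hypothetical model that never predicts the minority class

print(accuracy(y_true, always_majority))           # 0.95
print(recall(y_true, always_majority, 1))          # 0.0
print(balanced_accuracy(y_true, always_majority))  # 0.5
```

The 95% accuracy hides a minority-class recall of zero, which balanced accuracy exposes as 0.5, the chance level for two classes.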

FAQs on RSW

Q1: What are the different types of weights that can be used in RSW?

A: Commonly used weights include:

- Inverse Class Frequency Weights: These weights are inversely proportional to the frequency of each class.

- Over-sampling Weights: These weights are assigned to instances based on the desired oversampling ratio for the minority class.

- Cost-Sensitive Weights: These weights are assigned based on the cost of misclassifying instances from different classes.
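To compare the three weighting schemes, it helps to look at the share of draws each one gives the minority class. The sketch below assumes a toy dataset of 90 majority and 10 minority instances; the oversampling ratio of 3 and the misclassification costs of 1 and 5 are arbitrary illustrative values, and all function names are hypothetical.

```python
from collections import Counter


def inverse_class_frequency_weights(labels):
    """Weight each instance by 1 / (size of its class)."""
    counts = Counter(labels)
    return [1.0 / counts[y] for y in labels]


def oversampling_weights(labels, minority, ratio):
    """Hypothetical scheme: each minority instance counts `ratio` times as much as a majority one."""
    return [float(ratio) if y == minority else 1.0 for y in labels]


def cost_sensitive_weights(labels, costs):
    """Weight each instance by the (assumed) cost of misclassifying its class."""
    return [float(costs[y]) for y in labels]


def class_shares(labels, weights):
    """Expected fraction of weighted draws that land in each class."""
    total = sum(weights)
    shares = {}
    for y, w in zip(labels, weights):
        shares[y] = shares.get(y, 0.0) + w / total
    return shares


labels = [0] * 90 + [1] * 10
print(class_shares(labels, inverse_class_frequency_weights(labels)))
print(class_shares(labels, oversampling_weights(labels, minority=1, ratio=3)))
print(class_shares(labels, cost_sensitive_weights(labels, {0: 1, 1: 5})))
```

Under these assumptions the minority class receives about 50%, 25%, and 36% of the draws respectively: inverse class frequency fully balances the classes, while the other two schemes shift the mix by an amount you choose.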

Q2: How does RSW compare to other methods for handling imbalanced data?

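A: RSW is closely related to random oversampling and undersampling. Drawing with replacement under minority-favoring weights repeats minority instances, as random oversampling does, and draws each majority instance less often, as random undersampling does. Compared with the synthetic minority oversampling technique (SMOTE), which creates new minority instances by interpolating between nearest neighbors, RSW is simpler and cheaper to compute, but it can only repeat existing instances rather than generate new ones.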

Q3: Is RSW applicable to all types of machine learning models?

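A: RSW only changes which rows go into the training sample, so any learning algorithm can be trained on the result. Class-based weights, however, require class labels, so the technique fits classification most naturally. Applying it to regression would require first grouping the target values into ranges, and it does not directly apply to unsupervised clustering, where there are no labels to rebalance.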

Q4: What are the limitations of RSW?

A: While RSW is a powerful technique, it has certain limitations:

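- Overfitting: Because minority instances are drawn with replacement, a small minority class can appear many times over in the sample, and the model may memorize those few instances. It then performs well on the training data but poorly on unseen data. Overly aggressive weights make this worse.

- Loss of Information: Majority-class instances are drawn less often, so some may never appear in the sample at all. This discards information from the majority class, the same drawback seen in undersampling.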
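Both limitations can be seen in a small simulation. The sketch below assumes a hypothetical dataset of 990 majority and 10 minority instances and inverse class frequency weights; it draws a 1,000-row sample with the standard library's `random.choices` and counts how many distinct minority instances it contains and how many majority instances never appear.

```python
import random
from collections import Counter

rng = random.Random(42)

# Hypothetical dataset: 990 majority (label 0) and 10 minority (label 1) instances.
labels = [0] * 990 + [1] * 10
counts = Counter(labels)
weights = [1.0 / counts[y] for y in labels]  # inverse class frequency

# Draw a 1,000-row sample with replacement; each class is drawn about half the time.
idx = rng.choices(range(len(labels)), weights=weights, k=1000)

minority_draws = [i for i in idx if labels[i] == 1]
majority_draws = [i for i in idx if labels[i] == 0]

# Overfitting risk: many minority rows, but only a handful of distinct instances.
print(len(minority_draws), len(set(minority_draws)))

# Information loss: majority instances that never made it into the sample.
unseen = 990 - len(set(majority_draws))
print(unseen)
```

In a run like this, roughly 500 minority rows come from just 10 distinct instances, and typically around 600 of the 990 majority instances are never drawn.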

Tips for Implementing RSW

- Experiment with Different Weights: Explore different weighting schemes to find the optimal balance for your specific dataset.

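- Monitor Model Performance: Evaluate the model on both the training set and a held-out validation set, and watch for a widening gap between them, which signals overfitting. Split off the validation set before resampling and keep it at the original class distribution, so that it reflects the data the model will see in practice.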

- Consider Other Techniques: In some cases, combining RSW with other methods, such as SMOTE, can further enhance model performance.
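One way to experiment with weights is to interpolate between no rebalancing and full balancing. The sketch below assumes a hypothetical tempered scheme, w = (1 / class count) ** alpha, which is an illustration rather than a standard named method: alpha = 0 leaves the original distribution unchanged and alpha = 1 reproduces inverse class frequency weights.

```python
from collections import Counter


def tempered_weights(labels, alpha):
    """Hypothetical scheme: w = (1 / class_count) ** alpha.

    alpha = 0 keeps the original class mix; alpha = 1 fully balances the classes.
    """
    counts = Counter(labels)
    return [(1.0 / counts[y]) ** alpha for y in labels]


def minority_share(labels, weights, minority):
    """Expected fraction of weighted draws that come from the minority class."""
    total = sum(weights)
    return sum(w for y, w in zip(labels, weights) if y == minority) / total


labels = [0] * 95 + [1] * 5
for alpha in (0.0, 0.5, 1.0):
    share = minority_share(labels, tempered_weights(labels, alpha), minority=1)
    print(f"alpha={alpha}: expected minority share {share:.2f}")
```

For 95 majority and 5 minority instances, the expected minority share rises from 0.05 at alpha = 0 to about 0.19 at alpha = 0.5 and 0.50 at alpha = 1. Each setting would then be compared on the held-out validation set.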

Conclusion

RSW is a simple tool for addressing data imbalance in machine learning. By shifting the class mix of the training sample toward the minority class, it helps models learn to recognize minority cases they would otherwise ignore. Its simplicity and low computational cost make it a common first choice for imbalanced classification, but its risks of overfitting to repeated minority instances and of discarding majority-class information mean it should be evaluated carefully on untouched validation data and, where needed, combined with other techniques.